Consciousness, Robots, and DNA

This is a paper to appear in the Proceedings of the International Congress for Basic Science, based on my talk in Beijing on July 17, 2023. The talk explores the complex interplay between consciousness, robotics, and DNA using the insights of artificial intelligence, neuroscience, and physics. Specifically, I discuss the evolution of language models and the quest for human-level AI, the scientific dimensions of consciousness, the elusive nature of "now" in consciousness, and the enigma of so-called cat-states in quantum theory. Finally, I examine the role of DNA replication as a possible generator of cat-states and its implications for understanding consciousness, free will, and the nature of reality. I hope to encourage further exploration at the intersection of these fields, highlighting the profound questions they raise about the nature of consciousness in both biological and artificial systems.

I would like to thank Professor S. T. Yau and the Beijing Institute for Mathematical Science and its Applications (BIMSA) for their invitation to address this inaugural congress. This is a wonderful opportunity for Eastern and Western mathematical scientists to exchange ideas. My talk is based on Chapters 9, 10 and 14 of my recent book "Numbers and the World: Essays on Math and Beyond". I hope it will stimulate interest in several enigmas that raise philosophical as well as scientific questions. Full references, many figures and many further aspects of these issues can be found in that book.

1. Exploring the Evolution of Language Models and the Possibility of Conscious AI

The basic learning algorithm used in "deep learning" was devised many decades ago but was long considered useful only in highly constrained tasks like reading handwritten zip codes. In the 2010s, however, two things happened: first, the widespread use of computers generated huge datasets of text and images; and second, it was realized that the graphics processing units (GPUs) present in nearly all computers could be adapted to speed up the deep learning algorithm enormously. All of a sudden, deep learning could be used with data many orders of magnitude larger than before, and the learning process could be implemented on much faster computers. This, plus the invention of an extension of deep learning called "transformers", led to amazing successes. Speech and face recognition finally began to work at a useful level of accuracy and, much more ambitiously, language models learned to generate coherent, contextually relevant responses to questions on virtually any topic. This is best known from the series of GPT (Generative Pre-trained Transformer) programs, which stunned the world with their seemingly human-like responses to most queries. These programs take trillions of words as their data and then train billions of parameters embedded in fairly simple computer code.

But GPT lacks any embodiment in the real world: it has no senses and no robotic actuators. Despite their remarkable linguistic proficiency, these models exhibit a form of cognitive limitation akin to that of a quadriplegic blind individual. Note that Helen Keller, though blind and deaf, retained touch and muscles and became a brilliant writer. But GPT lacks the ability to directly perceive and interact with the actual external world beyond its vast textual input. As a result, it generates answers that have been called "hallucinations" or, simply put, it makes stuff up. For GPT, Santa Claus might as well exist as not.

1.1 AI's Progress Beyond Language

The expected evolution of AI involves transcending the confines of language processing by integrating both sensory inputs and motor capabilities. This paradigm shift entails linking the language model with systems that interpret signals from the real world, providing the AI with a platform to learn cause-and-effect relationships. Transformer-based architectures hold promise for bridging the gap between language-based understanding and sensory/motor interaction. A major difficulty here is providing datasets in which the computer interacts with the world that are large enough for deep learning training. I think it is likely that experiments will use virtual worlds in order to create orders of magnitude more pseudo-world training data. More simply, continuous exposure to diverse experiences through ongoing, unrestricted access to the internet should give the language model a sense of discernment, helping it distinguish truth from fictional constructs. Additionally, reinforcement learning mechanisms become essential for optimizing the AI's skills in virtual or physical domains.

Google is not resting on its laurels. After my talk at BIMSA, I found on arXiv multiple papers by their group implementing exactly what I had hoped for. Their program ViT (Vision Transformer) extends the transformer architecture underlying GPT to visual input. Interestingly, to do so they devise a version of saccades, creating linear sequences of distinct image patches for the transformer to relate, as an alternative to sentences broken into words. More recently, they have published descriptions of the programs PaLM-E (Pathways Language Model, Embodied) and RT-2 (Robotic Transformer 2), which incorporate limited movements along with vision, rather similar to the old MIT program called "Blocks World", but a world apart in its potential. Robots performing fluid movements and adapting to diverse grasping motions begin to look likely within a few years.
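The step of turning an image into a "sentence" of patches can be sketched in a few lines of Python. This is a simplified illustration, not Google's code: it assumes a grayscale image stored as nested lists whose sides are exact multiples of the patch size, and the function name `image_to_patch_sequence` is hypothetical. The published ViT additionally maps each flattened patch through a learned linear layer and adds position embeddings before the transformer layers see it.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel values


def image_to_patch_sequence(image: Image, patch: int) -> List[List[float]]:
    """Split an image into non-overlapping patch x patch tiles, read them in
    raster order (left to right, top to bottom), and flatten each tile.

    The returned list plays the role of a sentence whose "words" are tiles.
    Assumes height and width are exact multiples of `patch`.
    """
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image sides must be multiples of the patch size")
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tile = [image[r][c]
                    for r in range(top, top + patch)
                    for c in range(left, left + patch)]
            tokens.append(tile)
    return tokens


if __name__ == "__main__":
    # A 4x4 toy image whose pixel values are simply their raster index.
    toy = [[4 * r + c for c in range(4)] for r in range(4)]
    seq = image_to_patch_sequence(toy, patch=2)
    print(len(seq))  # 4 tokens
    print(seq[0])    # [0, 1, 4, 5]
```

Reading the tiles in a fixed raster order is what turns a two-dimensional image into the one-dimensional sequence a transformer expects.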

1.2 Exploring Radical Transformations

In contemplating more radical advances, a further step is programming AI models to "see" and "speak" with actual people, facilitating a form of acquaintance with humanity. Imagine a lab with a robot which roams the halls and offices and talks to the human researchers it encounters, asking them what they are working on and whether it can help. To do this, it must build a model of each person's job, personality, and history. Of course, the robot does not contain its computer: it communicates electronically with the mainframe in the lab, sending and receiving data. The objective is to foster mutual interactions, probably leading to anthropomorphism as users begin to perceive the AI as a seemingly conscious entity. Humans have no problem anthropomorphizing their pets, and children do the same with stuffed animals. All this raises profound questions about the potential awareness and consciousness of the enhanced AI program. Once the AI is "embodied" in the real world, it will have agency and must be given objectives, e.g. helping people. This is what scares the pants off pessimists who recall Goethe's poem "The Sorcerer's Apprentice", in which the apprentice's magic runs amok. Will the robot develop self-awareness, and to what extent can it emulate human-like urges and emotions?

I would just like to point out that what drove animal evolution and human conflict is the deep-seated drive for survival and for the power to control one's environment, but there is no need to translate this into the AI's code. Why should a machine be concerned with self-preservation unless told to be? Instead, teach it the Hippocratic oath: "do no harm". On the other hand, the creations of Silicon Valley have all been perverted by criminals to trick, subvert and impoverish naive users. So one must assume that criminals or criminal regimes will attempt to misuse robots, and that they will embed an urge to control humans in the robot's code if they can. This is my worry.

I may be wrong, but I see no reason to expect an AI to exhibit any emotions at all unless it has been programmed to have them. For that matter, even though emotions are such a big part of human consciousness, they are still very poorly understood. I tried to summarize some of this research in Chapter 10.3 of my book but was surprised at how tentative it still is. Certainly, though, the exploration of these issues delves into the mystery of AI consciousness, prompting inquiries into the nature of an AI's subjective experience and the ethical implications of human-AI relationships.

2. Unraveling the Scientific Dimensions of Consciousness

The exploration of consciousness stands at the crossroads of philosophy, neuroscience, and artificial intelligence, challenging us to analyze what it means to be conscious. The historical perspective on consciousness reveals a shift from naively associating it with voluntary movement or with breath (atma, meaning breath in Sanskrit, is the Hindu essence of life) to contemporary studies using advanced techniques such as brain recordings. The latter date from Penfield's amazing records of human experiences during cortical stimulation of awake patients on the operating table (to identify the focal area of their epilepsy). Notably, the taboo surrounding the discussion of consciousness in neuroscientific circles was challenged by John Eccles and Karl Popper in their groundbreaking work, "The Self and its Brain," published in 1977. This daring endeavor opened the door to a new era of scientific inquiry into consciousness, marked now by dedicated conferences and journals. Today, the question of whether a robot can possess consciousness is regarded as a legitimate scientific inquiry.

2.1 Locating Consciousness

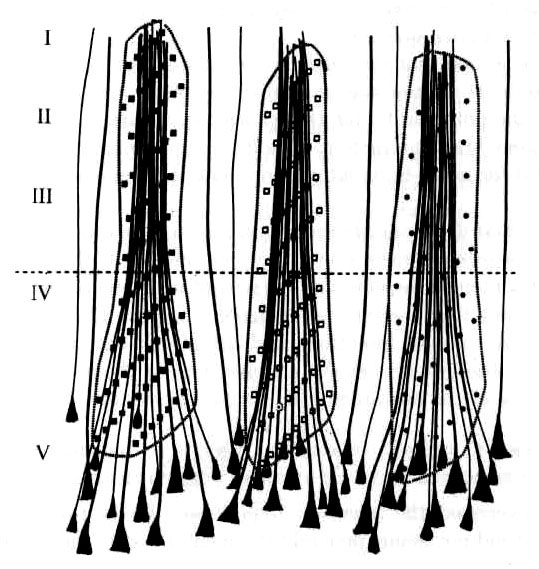

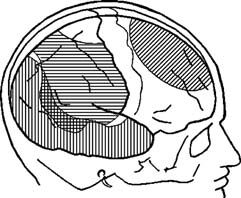

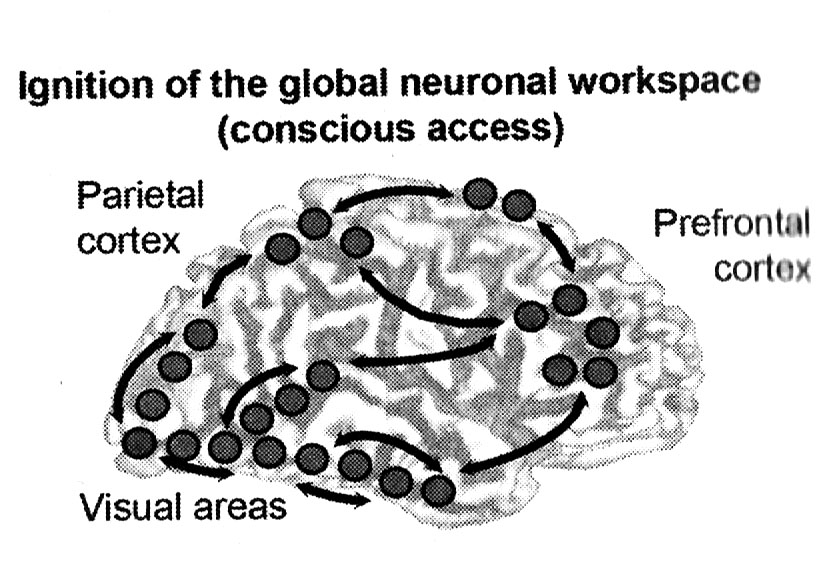

The quest to locate consciousness within the intricacies of the brain has generated diverse theories and controversies. Eccles and Popper postulated a direct correlation between bundles of pyramidal cells, particularly in the prefrontal lobe, and components of thought (figure 1, left). Conversely, Penfield and Merker, observing minimal effects on consciousness after major cortical excisions (in surgery to control epilepsy, figure 1, middle), proposed an origin of consciousness in the midbrain. Building upon the legacy of Freud, contemporary neuroscientists like Stanislas Dehaene challenge traditional notions by showing that much of human cognition, including reasoning, occurs {\it unconsciously.} The proposal that \(\gamma\)-oscillation-based synchrony, pervasive in the brain, gives rise to conscious thoughts shifts the focus from the activity of single neurons or small sets of neurons to the joint activity of large parts of the neocortex as the neural mechanism underlying consciousness (the global workspace theory, figure 1, right).

3. The Enigma of "Now" in Consciousness and Relativity

The concept of "now" holds a unique place in the tapestry of consciousness, representing the immediate awareness of living each moment while our memory of the past continuously increases and the unknowns of the future gradually reveal themselves. We struggle to understand how this trip down the river of time started when we were born and will end (or will it?) when we die. The question of awareness in fully intelligent robots shifts this conversation from the religious plane to a more immediate, pressing, arguably scientific issue.

3.1 The Elusive Nature of "Now"

Consciousness, characterized by awareness, memory, and the experiential immediacy of the present moment, finds itself in a paradoxical relationship with the principles of physics. In the realm of physics, the pursuit of patterns valid uniformly in all parts of space-time has no need for the concept of a subjective "now." The division of time into past, present, and future is irrelevant in physics but becomes a central object of contemplation in many religious traditions, for example in Buddhist meditation and in Saint Augustine's Confessions, Book 11. Although a starting time is needed to pose hyperbolic initial value problems, its choice is quite arbitrary.

Newton had written "Absolute, true and mathematical time, of itself and from its own nature, flows equably without regard to anything external". Space and time were two distinct continua and the concept of simultaneity was obvious. Einstein's theory of relativity shattered this conventional understanding of time by abandoning absolute simultaneity, destroying forever the notion of a universal "now". That notion joins the flat earth and the geocentric model of the solar system as major misconceptions of the true universe held by naive humanity. Although the discrepancies between clocks in satellites and clocks on earth are now mere microseconds per day, the further we explore the universe, the more these discrepancies will grow, and it is likely that parents who undergo prolonged acceleration in spaceships, or who dive close to black holes, will outlive their great-grandchildren. There will be NO way to fix a universal time for mankind. A person's subjective time is simply the proper time, the integral of the space-time line element along his or her world line, and it is a separate time for each person.
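Proper time along a world line can be computed directly. The sketch below assumes flat space-time (special relativity only) and a traveller whose speed \(v(t)\) is given in one fixed inertial frame, so that \(\tau = \int_0^T \sqrt{1 - v(t)^2/c^2}\,dt\). The function names are hypothetical, and gravitational effects, such as those near a black hole, are not modelled.

```python
import math
from typing import Callable

C = 299_792_458.0  # speed of light, m/s


def proper_time(speed: Callable[[float], float], duration: float,
                steps: int = 10_000, c: float = C) -> float:
    """Midpoint-rule estimate of tau = integral over [0, T] of sqrt(1 - v(t)^2/c^2) dt."""
    dt = duration / steps
    return sum(math.sqrt(1.0 - (speed((k + 0.5) * dt) / c) ** 2) * dt
               for k in range(steps))


def clock_lag(speed: Callable[[float], float], duration: float,
              steps: int = 10_000, c: float = C) -> float:
    """Coordinate time minus proper time: the integral of 1 - sqrt(1 - b^2),
    b = v/c, written as b^2 / (1 + sqrt(1 - b^2)) to avoid cancellation
    when v is much smaller than c."""
    dt = duration / steps
    total = 0.0
    for k in range(steps):
        b2 = (speed((k + 0.5) * dt) / c) ** 2
        total += b2 / (1.0 + math.sqrt(1.0 - b2)) * dt
    return total


if __name__ == "__main__":
    # A traveller moving at 0.6 c for 10 years of coordinate time ages 8 years.
    print(proper_time(lambda t: 0.6 * C, 10.0))
    # Speed-only lag of a clock at roughly GPS orbital speed, over one day.
    print(clock_lag(lambda t: 3_874.0, 86_400.0) * 1e6, "microseconds")
```

For a clock moving at roughly the orbital speed of a GPS satellite (about 3.87 km/s), the speed effect alone comes to about 7 microseconds per day. In the real GPS system this is outweighed by a larger gravitational effect of opposite sign, which this sketch ignores.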

The clash between Einstein and the philosopher Bergson in 1922 epitomized this tension. While Einstein envisioned life as a trajectory in 4D space-time, Bergson, guided by his introspective and philosophical analysis of consciousness, rejected the relativity framework. Curiously, though, Einstein, grappling with the subjective experience of the "now," ultimately acknowledged the limits of science in capturing this aspect of time. Towards the end of his life, he is reported by Rudolf Carnap as saying "The experience of Now means something special for man, something essentially different from the past and the future, but that this important difference does not and cannot occur within physics ... there is something special about the Now which is just outside of the realm of science."

Curiously, Bergson is not the only voice clinging to a universal time. Cosmologists have insisted on it too. Although starting from general relativity, their so-called "standard model" introduces some doubtful axioms that lead to a space-time which splits globally as a product of space and time, with a metric that has no cross terms and only a time-varying spatial expansion/contraction coefficient. My sense is that the data from the James Webb Space Telescope are finally repudiating this simplistic model.
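For reference, the metric of the standard cosmological model is the Friedmann-Lemaître-Robertson-Walker (FLRW) metric. In comoving coordinates, one common way of writing it is
$$ ds^2 = -c^2\,dt^2 + a(t)^2\left(\frac{dr^2}{1-kr^2} + r^2\left(d\theta^2 + \sin^2\theta\,d\varphi^2\right)\right), $$
where \(a(t)\) is the time-varying scale factor (the expansion/contraction coefficient) and \(k \in \{-1, 0, +1\}\) fixes the sign of the spatial curvature. There are no cross terms such as \(dt\,dr\), so the cosmic time \(t\) slices space-time into a family of global "nows" for observers at rest in these coordinates.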

3.2 The Timeline of a Robot

In the context of artificial intelligence and robotics, the understanding of "now" takes a divergent path. Unlike human consciousness, robots can be powered on and off, with their computations and sensors dispersed across server clusters and physical bodies. Robot awareness, whatever form it takes, does not follow a single, continuous path but rather manifests as a time-directed mesh, possibly discontinuous. Robots will likely all use parallel processing and will then be unable to fix a timeline of their thoughts at the microsecond level. Neuroscientists, engaged in a parallel inquiry into human awareness, have no consensus on where consciousness is localized in the brain, so perhaps human awareness too is a mesh rather than a simple one-dimensional trajectory. In any case, mental processing is much slower, operating on a timescale of hundreds of milliseconds (bats are a startling exception, distinguishing echoes separated by mere microseconds).

The entwined nature of consciousness and the temporal dimension remains an enigma, with physics challenging the very concept of an absolute "now." As debates persist among scientists and philosophers, the exploration of consciousness in both human and artificial entities opens new frontiers of inquiry.

4. Quantum Theory, the Enigma of Cat-States and DNA Replication

In the last part of my talk, I want to discuss the role of conscious beings in the foundations of quantum theory. The problem here has nothing to do with time but with the merging of deterministic classical physics with the non-deterministic quantum description of the atomic world. This merging is needed in describing the act of measuring an atomic event with the aid of some device that amplifies events involving interacting elementary particles into something the physicist can see with his or her own eyes. I will also propose that DNA replication can be viewed as such an amplifying device and that this brings quantum reality much closer to our world than is usually believed.

4.1 The Quantum Enigma: Measurement and Cat-States

Let's recall that quantum theory is based on a so-called wave function \(\phi\), an element of norm 1 in a complex Hilbert space \(\mathcal H\), defined up to multiplication by a scalar \(e^{i\theta}\). \(\phi\) is not directly observable but evolves by the deterministic equation of Schrödinger. Measurable things are described by Hermitian operators \(X\) on \(\mathcal H\) in the following sense: if \(\phi\) lies in the eigenspace \(X_a\) of \(X\) with eigenvalue \(a\), then the measurement corresponding to \(X\) must give the value \(a\). But what happens if \(\phi\) is not in any eigenspace? \(\phi\) can be decomposed as the integral of its projections onto \(X\)'s eigenspaces. More usefully, the squared norms of these projections define a probability measure on the spectrum of \(X\) whose value on a subset \(U\) is the probability that the measured value of \(X\) will lie in \(U\) (Born's rule). The measured value of \(X\) has a mean under this measure and a standard deviation quantifying our uncertainty. Finally, after a measurement determines that the value is in \(U\), \(\phi\) must be {\it reset} to its projection \(p_U(\phi)\) onto the corresponding eigenspaces, rescaled to norm 1. This is called the "collapse of the wave form".
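In a finite-dimensional Hilbert space, the recipe above (spectral measure, mean and standard deviation, collapse) fits in a few lines of Python. This is a toy sketch under stated assumptions: \(\phi\) is given by its coordinates in an orthonormal eigenbasis of \(X\), eigenvalues may repeat (an eigenspace of dimension greater than 1), and all function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Set, Tuple


def spectral_measure(amplitudes: Sequence[complex],
                     eigenvalues: Sequence[float]) -> Dict[float, float]:
    """Born-rule distribution of the measured value of X in the state phi.

    amplitudes[k] is the coordinate of phi along the k-th vector of an
    orthonormal eigenbasis of X, and eigenvalues[k] is its eigenvalue.
    Equal eigenvalues are pooled, so each entry is the squared norm of the
    projection onto one eigenspace divided by ||phi||^2.
    """
    norm2 = sum(abs(z) ** 2 for z in amplitudes)
    measure: Dict[float, float] = {}
    for z, a in zip(amplitudes, eigenvalues):
        measure[a] = measure.get(a, 0.0) + abs(z) ** 2 / norm2
    return measure


def mean_and_std(measure: Dict[float, float]) -> Tuple[float, float]:
    """Mean and standard deviation of the measured value."""
    mean = sum(a * prob for a, prob in measure.items())
    var = sum((a - mean) ** 2 * prob for a, prob in measure.items())
    return mean, math.sqrt(var)


def collapse(amplitudes: Sequence[complex], eigenvalues: Sequence[float],
             observed: Set[float]) -> List[complex]:
    """Reset phi to its projection p_U(phi) onto the eigenspaces whose
    eigenvalues lie in the observed set U, rescaled to norm 1."""
    projected = [z if a in observed else 0j
                 for z, a in zip(amplitudes, eigenvalues)]
    norm = math.sqrt(sum(abs(z) ** 2 for z in projected))
    if norm == 0.0:
        raise ValueError("the observed set U has probability zero")
    return [z / norm for z in projected]


if __name__ == "__main__":
    eig = [0.0, 1.0, 1.0]           # eigenvalue 1 has a 2-dimensional eigenspace
    phi = [0.6, 0.48j, 0.64]        # already of norm 1
    m = spectral_measure(phi, eig)  # P(0) = 0.36, P(1) = 0.64
    print(m, mean_and_std(m))       # mean 0.64, std 0.48, up to rounding
    print(collapse(phi, eig, {1.0}))
```

Multiplying every amplitude by a common phase \(e^{i\theta}\) leaves the measure unchanged, matching the statement that \(\phi\) is only defined up to such a factor.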

Immediately we have a problem: collapsing the wave form after a measurement clearly means that \(\phi\) describes what you know, not what is actually "there". In other words, \(\phi\) is an epistemic thing, not an ontological one. But on the other hand, long calculations with \(\phi\)'s allow accurate predictions of complex particle interactions and even of the chemical properties of complex molecules. It is hard to come to terms with these successes without thinking of \(\phi\) as ontological, describing something "really there". This is the central dilemma at the heart of quantum theory, the problem that Feynman often said no one really understood. The indeterminacy of measurements is also an issue. In ordinary life, outside physics labs, things tend to be predictable. Sure, there is the unpredictable nature of weather, but we are pretty sure we know the PDEs that can, in principle, predict it; the issue there is large Lyapunov exponents. Your toothbrush is where you left it last night, and if you think stuff is acting mysteriously, you may need to see a psychiatrist. Let's make the seeming determinacy of macroscopic life precise. First, make a list of the (bounded) Hermitian operators \(\{X_i\}\) defining observations we can make with human senses alone and, second, specify tolerances \(\epsilon_i\) describing how small a change will be noticeable to our senses. Then the determinacy of the macroscopic world can be expressed reasonably well by requiring that the standard deviation of each \(X_i\) be less than the corresponding tolerance \(\epsilon_i\). Expressed as a formula, this means:
$$ \frac{\langle X_i^2 \phi, \phi \rangle}{\|\phi\|^2} -\left( \frac{\langle X_i \phi, \phi \rangle}{\|\phi\|^2}\right)^2 < \epsilon_i^2 \quad \text{for all } i.$$
This requirement defines an open set \(\mathcal{H}_{AMU} \subset \mathcal{H}\) with a screwy shape defined by 4th order inequalities, a set that I call the "almost macroscopically unique" (AMU) quantum states.
The states in its complement \(\mathcal{H}_{CAT}\) are referred to as "cat-states": states {\it where there is visible indeterminacy}. The archetypal cat-state was described by Schrödinger as the result of locking a cat in a sealed box for an hour with some radioactive material that triggers the release of cyanide when the material emits an alpha particle. After the hour, the state \(\phi\) describing alive or dead is a weighted sum of an alive-cat state and a dead-cat state, \(\sqrt{p}\,\phi_\text{alive} + \sqrt{1-p}\,\phi_\text{dead}\), where \(p\) is the probability of finding the cat alive. Lots of questions arise: was the cat really {\it neither alive nor dead} but in a superposition a second before the box was opened? What happens if a robot opens the box?
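The AMU test can be tried on a toy model. The sketch assumes a two-dimensional Hilbert space spanned by orthonormal "alive" and "dead" states, with a single observable \(X\) that returns 1 for alive and 0 for dead; realistic macroscopic observables act on vastly larger spaces. The helper names are hypothetical. For a cat state with amplitudes \(\sqrt{p}\) and \(\sqrt{1-p}\) on these two states, the variance of \(X\) is \(p(1-p)\), so with \(p = 1/2\) the standard deviation is \(1/2\) and the state lies in \(\mathcal{H}_{CAT}\) for any tolerance \(\epsilon \le 1/2\).

```python
import math
from typing import List, Sequence

Matrix = Sequence[Sequence[complex]]


def apply(X: Matrix, v: Sequence[complex]) -> List[complex]:
    """Matrix-vector product X v."""
    return [sum(row[j] * v[j] for j in range(len(v))) for row in X]


def inner(u: Sequence[complex], v: Sequence[complex]) -> complex:
    """<u, v>, linear in u and conjugate-linear in v."""
    return sum(a * b.conjugate() for a, b in zip(u, v))


def variance(X: Matrix, phi: Sequence[complex]) -> float:
    """<X^2 phi, phi>/||phi||^2 - (<X phi, phi>/||phi||^2)^2 for Hermitian X."""
    norm2 = inner(phi, phi).real
    x_phi = apply(X, phi)
    second_moment = inner(apply(X, x_phi), phi).real / norm2
    mean = inner(x_phi, phi).real / norm2
    return second_moment - mean ** 2


def is_amu(observables: Sequence[Matrix], tolerances: Sequence[float],
           phi: Sequence[complex]) -> bool:
    """True if every observable has standard deviation below its tolerance."""
    return all(variance(X, phi) < eps ** 2
               for X, eps in zip(observables, tolerances))


if __name__ == "__main__":
    X_alive = [[1, 0], [0, 0]]                    # 1 if alive, 0 if dead
    p = 0.5
    cat = [math.sqrt(p), math.sqrt(1 - p)]
    print(variance(X_alive, cat))                 # p(1 - p) = 0.25, up to rounding
    print(is_amu([X_alive], [0.1], cat))          # False: a cat-state
    print(is_amu([X_alive], [0.1], [1.0, 0.0]))   # True: a definitely live cat
```

With \(p = 0.999\) the standard deviation drops to about 0.03, and the same kind of superposition passes the test for \(\epsilon = 0.1\): whether a state counts as a cat-state depends on the chosen tolerances.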

4.2 Physicists' Fudges and the Dilemma

Physicists have not reached any consensus on how to deal with this dilemma. Heisenberg took an epistemic stance, saying at one point "The natural laws, formulated mathematically in quantum theory, no longer deal with the elementary particles themselves but with our knowledge of them." Another group has sought "hidden variables" that evolve deterministically, but their proposals have not incorporated the extra complexities of quantum field theory. And then we have the "multiversers" who say the universe splits into many copies of itself, one for each value of the indeterminate measurement. As far as I'm concerned, I've lived one life, and I find the idea that there are millions of me living other lives a bit silly. By and large, physicists hate the idea that consciousness has anything to do with physics and want to place the "Heisenberg cut", where the indeterminacy is resolved, somewhere in the middle of the measuring process. The epistemic group now clothes the subjective aspect of measurements in the mathematics of information theory, which is more comfortable for scientists. Information theory introduces the concept of \(\phi\) as information without specifying its source. I discuss these multiple approaches to the dilemma of measurements further in my book, Ch. 11.1. The fundamental question of where and how the Heisenberg cut occurs remains elusive.

4.3 The Macroscopic Dilemma and Evolution of States

The introduction of "almost macroscopically unique" states \(\mathcal{H}_{AMU}\) attempts to capture the apparent absence of cat-states in everyday life by defining a subset of Hilbert space where macroscopic observables exhibit small variances. However, Schrödinger's evolution does not map \(\mathcal{H}_{AMU}\) to itself! For example, his equation for a single free particle is readily solved and leads to \(\phi\)'s that spread out without limit. As they spread, their wave aspect eventually dominates their particle aspect, and since measurements of the particle's position then become macroscopically indeterminate, the particle is in a cat-state. Physicists' labs convert atomic superpositions into macroscopic cat-states, but with a twist. All measuring apparatuses are made of huge numbers of atoms, on the order of Avogadro's famous number, so around \(10^{23}\). The original uncertainty spreads out into this mess, into what is often modeled as a "heat bath". The final macroscopic measurement is then best described by a "density operator": the partial trace of the rank one Hermitian operator \(\phi \cdot \phi^*\) over the apparatus, which leaves an operator on the Hilbert space of the measured value alone. When this density operator has more than one substantial eigenvalue, the result is a cat-state.
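Both phenomena in this paragraph can be checked numerically. The sketch below assumes, first, a free Gaussian wave packet with initial position spread \(\sigma_0\), whose spread grows as \(\sigma(t) = \sigma_0\sqrt{1 + (\hbar t/2m\sigma_0^2)^2}\); and second, a toy "apparatus" with just two states in place of \(10^{23}\) atoms, entangled with a two-state measured system. The function names are hypothetical.

```python
import math
from typing import List, Sequence

HBAR = 1.054571817e-34        # reduced Planck constant, J s
M_ELECTRON = 9.1093837015e-31  # electron mass, kg


def packet_width(sigma0: float, t: float, mass: float = M_ELECTRON) -> float:
    """Position spread at time t of a free Gaussian packet (no initial chirp)."""
    spread_rate = HBAR / (2.0 * mass * sigma0 ** 2)  # units of 1/s
    return sigma0 * math.sqrt(1.0 + (spread_rate * t) ** 2)


def reduced_density(psi: Sequence[Sequence[complex]]) -> List[List[complex]]:
    """Partial trace over the apparatus of the pure state |psi><psi|.

    psi[i][j] is the amplitude of (system state i) x (apparatus state j);
    the result is rho[i][k] = sum_j psi[i][j] * conj(psi[k][j]).
    """
    n = len(psi)
    return [[sum(psi[i][j] * psi[k][j].conjugate() for j in range(len(psi[i])))
             for k in range(n)]
            for i in range(n)]


def eigenvalues_2x2(rho: Sequence[Sequence[complex]]) -> List[float]:
    """Eigenvalues of a 2x2 Hermitian matrix, largest first."""
    a, d = rho[0][0].real, rho[1][1].real
    centre = (a + d) / 2.0
    half_gap = math.sqrt(((a - d) / 2.0) ** 2 + abs(rho[0][1]) ** 2)
    return [centre + half_gap, centre - half_gap]


if __name__ == "__main__":
    # Free electron localized to 1 nm, looked at 1 microsecond later.
    print(packet_width(1e-9, 1e-6))  # about 0.058 m
    # System coupled to a two-state apparatus; outcome probability p.
    p = 0.5
    recorded = [[math.sqrt(p), 0.0], [0.0, math.sqrt(1 - p)]]    # apparatus records outcome
    unrecorded = [[math.sqrt(p), 0.0], [math.sqrt(1 - p), 0.0]]  # apparatus unchanged
    print(eigenvalues_2x2(reduced_density(recorded)))    # two eigenvalues near 0.5
    print(eigenvalues_2x2(reduced_density(unrecorded)))  # eigenvalues near 1 and 0
```

With these numbers, an electron initially localized to a nanometre has a position spread of several centimetres after one microsecond. In the partial-trace example, when the apparatus records the outcome (its two states are orthogonal) the density operator is \(\mathrm{diag}(p, 1-p)\), with two substantial eigenvalues, a cat-state in the sense above; when the apparatus is left untouched, the reduced state stays pure, with eigenvalues 1 and 0.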

4.4 Measuring Instruments and DNA Replication

Measuring instruments, such as Wilson's cloud chamber and photomultiplier tubes, create cat-states. The cloud chamber does this by being on the verge of a phase change, so that the passage of a single charged particle can trigger a visible trail of condensation droplets. A photomultiplier tube contains a sequence of electrodes held at steadily increasing voltages, each of which responds to being hit by electrons by emitting more electrons, e.g. 5 times as many. These are the lab's workhorses, but I want to propose that there is one other amplifier that is everywhere in our world: DNA. The essence of our lives is that the single double-stranded set of human DNA produced by the union of two parents is replicated into some 30 trillion sets of the same DNA strands. Replication is not always 100% accurate, but every cell has multiple repair mechanisms to keep the error rate small. However, a multitude of injuries, radiation or poisonous chemicals for example, can cause mutations. Radiation, for example, can ionize a key atom, but always with some probability. The result is a superposition of two states: an ionized state and an un-ionized state. This superposition will propagate, creating a superposition of two distinct full strands of DNA, one mutated and one unmutated. In some cases, both will be viable, but the two outcomes will differ in one base pair.
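The two amplifiers in this paragraph can be compared with back-of-the-envelope arithmetic. The factor of 5 per electrode and the figure of 30 trillion copies come from the text; the choice of ten electrodes and the picture of every cell dividing in every round are illustrative assumptions, and the function names are hypothetical.

```python
import math


def dynode_gain(electrons_per_hit: float, stages: int) -> float:
    """Total gain of an idealized photomultiplier: every electrode multiplies
    the number of electrons by the same factor."""
    return electrons_per_hit ** stages


def doublings_needed(copies: float) -> int:
    """Smallest n with 2**n >= copies: the rounds of replication needed if
    every cell divided in every round (an idealization; real cell lineages
    divide unevenly and many cells die)."""
    return math.ceil(math.log2(copies))


if __name__ == "__main__":
    print(dynode_gain(5, 10))       # 9765625 electrons per initial electron
    print(doublings_needed(30e12))  # 45 rounds of doubling
```

In both cases a single microscopic event is copied into a macroscopic number of carriers after only a few dozen stages at most: about \(10^7\) electrons after ten electrodes, and 30 trillion DNA copies after roughly 45 rounds of doubling.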

The speculative notion that DNA replication itself induces DNA cat-states opens a Pandora's box of questions. If a mutation alters the sequence, the entire phenotype, the adult himself or herself, can become a superposition. This raises challenging questions about when and where a collapse occurs.

I want to suggest two things to speculate on. First, in the context of paleontology, were dinosaurs' senses developed enough to collapse the wave-form of a cat-dino? Or would the cat-dinos become cat-fossils, with the form of the ancient dino finally chosen only when paleontologists dug them up? Perhaps the shape of Mesozoic fauna was fixed in the 20th century when humans collapsed their wave-form.

Secondly, consider cancer. It is an old folk belief that cancer tends to strike grieving people more often than average and that positive attitudes sometimes create miraculous remissions. If a cancer starts as a cat-cancer, then consciousness leads to the choice between two quantum states. And perhaps free will, if such a thing exists, can twist Born's rule by affecting the probabilities of the two states. I have never heard of an experiment in which people try to affect the outcome of measuring a cat-state. The role of consciousness in collapsing the wave form is still debated and uncertain, so the possibility that Born's rule is just a default outcome that is subject to conscious manipulation should not be dismissed out of hand.

In my book, I go further and propose a new model for dealing with measurements. I suggest that we are living in a "Bohr-bubble" in space-time in which we share information and memory across civilizations in order to maintain a stable macroscopic world. Many animals are like fellow-travelers who get sucked up into this bubble, but whether dinosaurs or robots can also be included is not clear.

Conclusion

I have tried to shed light on subtle ideas at the intersection of AI, consciousness, and quantum mechanics. In the first section, I propose that robots with all the usual human abilities will be built fairly soon. Then the question of whether the robot has consciousness will have to be discussed. Personally, I have been amazed that the study of consciousness has gone from a theological and philosophical topic to a scientific one as I describe in the second section. But I believe there is an obstacle: the experience of "now" seems impossible to define scientifically as I discuss in the third section. This makes the role of consciousness in quantum theory very significant. The collapse of the wave-form is needed to prevent cat-states from disrupting the apparently deterministic macroscopic world. This is described in the fourth section together with the hypothesis that DNA replication is continually creating cat-states, for instance in cancer mutations. The interwoven complexities of quantum theory, measurement-induced cat-states, and the potential role of DNA replication as a measuring instrument challenge our understanding of reality and consciousness. As physicists grapple with the enigma of the quantum world, the implications for free will, the nature of measurements, and the collapse of cat-states lead back to the mysteries of consciousness.